Back in the wild days of the early web, it was pretty clear that someone needed to get their shit together and tell people how it was and how it should be. Everyone had their strange little additions to HTML to twist and turn it different ways, and there was no clear delineation between right and wrong. Are tables OK? Are frames? What about iframes?

Thankfully, the W3C was there to clear things up and make sure that there was a clear path between right and wrong.

Thanks to the magnificent stewardship of the W3C, we've seen reference browsers so that it's clear how web pages should look.

Just think - if it weren't for the W3C, we'd be stuck with goofy tags like blink and marquee that were added by Netscape and Microsoft respectively to this day!

Thank god they locked that shit down and slapped people's hands when they tried to add inane crap like that in, right?

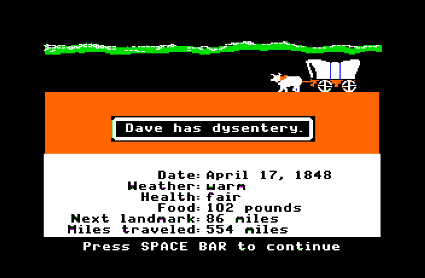

Except, judging by the fact that you're blinking and rubbing your eyes and probably cursing me for being such a dick and stuffing those two proprietary tags in there and your browser rendering them, they failed.

CSS was put out with the best of intentions, but when it comes to CSS, you can count on one thing - it will not work right the first time in all browsers. Ever.

Which doesn't stop them from diving ever deeper into never-never land and releasing specs to the public on how to generate tables using nothing but CSS and magic. Not that CSS1's all that well-supported. Or CSS2. But don't worry, just use CSS3 and suddenly everything's gonna be OK! Oh, if only we had some way to express tabular data that's supported in all browsers. If only.

The simple use cases don't work right but it doesn't stop them from putting out ever more elaborate specs based deeper and deeper in fantasy land. Worse yet, you'll find supporters who say things like...

"The difference is that an HTML table is semantic (it describes the data). The 'table' value of the display: property has no semantic meaning, and simply tells the browser how to display something. There is a huge difference there."

You dare to question the sanity of an elaborate hand-waving solution that doesn't work in, oh say, 25% of browsers out there when there's a simple way that works reliably?

"It's only silly if you refuse to distinguish between content and layout."

In a sense, this is correct. Of course, this is in the same sense as me saying "I can go ahead and chop my legs off because it's silly to walk when jetpacks and rocket cars are obviously the way of the future."

I will give the W3C credit - I didn't know that there was a terser abbreviation for You Aren't Gonna Need It than YAGNI, but it looks like "W3C" will do.

So why a decade+ of fail? They did start churning out the XML spec back in 1996, and they managed to not fuck that up too badly (probably despite their best efforts). I know, LISP people - XML is a mangled version of sexprs. I have no idea what that means, but I will nod and slowly back away. I know, internet. XML is the worst format ever invented. Angle bracket tax! Excessively verbose! Have you seen the stupid things that people have done with it? Why didn't we settle on JSON or YAML?

But you know what? I can produce XML here and have confidence that whoever I hand it to is going to be able to do something meaningful with it. It's readily (if not speedily) parsed on just about every platform out there. For a lowest common denominator intercommunication, I gotta admit - it doesn't suck much worse than any other option available.
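To make that concrete, here's a minimal sketch of the round trip using nothing but Python's standard library. The `order`/`item` format and the `build_order`/`read_order` helpers are made up for illustration; they aren't from any real service. The point is only that the producing side and the consuming side need nothing fancier than a stock XML parser.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Tuple


def build_order(order_id: str, items: Dict[str, int]) -> str:
    """Serialize a hypothetical order into an XML string."""
    root = ET.Element("order", attrib={"id": order_id})
    for name, qty in items.items():
        # One <item qty="..."> element per line item, name as the text.
        item = ET.SubElement(root, "item", attrib={"qty": str(qty)})
        item.text = name
    return ET.tostring(root, encoding="unicode")


def read_order(xml_text: str) -> Tuple[str, Dict[str, int]]:
    """Parse the XML produced by build_order back into plain data."""
    root = ET.fromstring(xml_text)
    items = {item.text: int(item.get("qty")) for item in root.findall("item")}
    return root.get("id"), items


# Producer side: build the payload. Consumer side: parse it back.
payload = build_order("42", {"widget": 3, "gadget": 1})
order_id, items = read_order(payload)
```

Swap the consumer for Java, .NET, or whatever else is on the other end of the wire and the same few lines of parsing apply, which is about all I'm asking of an interchange format.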

That doesn't excuse the W3C from strapping on the complicator's gloves and trying to make it do things that it utterly fails at. SOAP? OK in theory, but were they not aware that interchange was going to be happening over public networks and maybe encryption was something that should be built into the messaging by default? Violate the spec and you've got what you want. Was there a point to the spec in the first place?

Oh, and did I mention that you can count on SOAP calls across heterogeneous clients to be a massive pain in the balls? When we started on our new project, calling across the wire to a service written in Java, we had two options - XML using their little format or SOAP. I put my foot down on using XML and refused to say more than "the only benefit to using SOAP will be hearing me laugh a lot at whoever has to implement it, because I won't even try it." I didn't even need to drop a "told you so" when our SOAP services started failing for no particularly good reason other than that the service was written in WCF and the consumer had the temerity to be written in .NET 2.0.

And SAML? Godawful. Over-wrought. A solution in search of a problem. A magnificently high face-to-palm ratio. I brushed up against that turd once upon a time and I can still taste the bile in the back of my throat because of it. For that one alone I would like to firebomb the collective crotches of the W3C's membership.

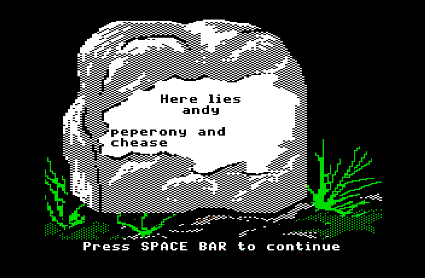

But they keep on trucking and suckers keep slurping it up.

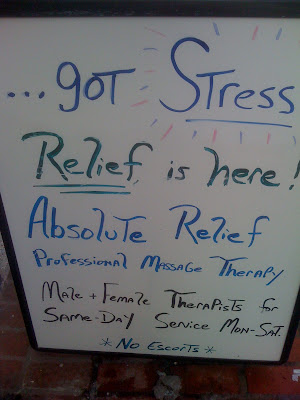

I'm a caveman and a terrible designer and I write pages using tables instead of divs. On the bright side, I don't spend days lost in trying to figure out why float isn't working in IE6 when it is in Firefox and now it's working in IE6 and Firefox and not IE7 and... who else is up for a trip over to W3C headquarters to visit unimaginable horrors on their collective genitalia?
