OK, forget reading Peopleware and The Mythical Man-Month. If you want to manage developers, there's only one - no wait! - two things you need to do.

- Be born in the late '70s/early '80s

- Play The Oregon Trail on an Apple II

That's it! If you can make it to the end of the trail, you know everything that you need to know about successfully managing software, so go successfully manage the shit out of a project or three!

Wait. You're still here? I should go on? OK.

You have your hunters, guys who strike out in hopes of lucking into a bounty of delicious, delicious meat. Speaking authoritatively as someone who's never hunted or caught anything of note while fishing, I'll say that a good hunter operates on instinct, innate ("animal") intelligence, skill and luck. Legends tell the tale of the hunter who struck off into the depths of the jungle with nothing but a knife and came back carrying a gorilcopterous (work with me, people, I live on the edge of the tundra) or some other big, tasty animal that lives in the jungle[0].

Then you've got the gatherers, who plant crops and (hopefully) eventually get to harvest the bounty. It takes a deep investment of time and resources to see a field from seed to harvest, and even then any number of natural disasters can beset you along the way. This isn't to say it's easy. On some level, we all understand how plants grow. They eat sunshine, water and carbon dioxide and crap oxygen and chlorophyll, right? On another level, there's a hell of a lot that goes into it: seeds cost money. Machines to till and harvest cost money. Irrigation costs money. You've got to know when to plant. What to plant. When to rotate your crops.

Developers are an impatient bunch, so we don't generally have the stomach for the small, far-off rewards and repetitive work that it takes to grow a crop. But we get hungry, so we strike out and hunt, hoping to bag the next seminal idea. OK, it's probably not a seminal idea by any stretch of the imagination, but for a brief moment you really feel like you've crafted something brilliant, something bigger than you could have hoped for.

But eventually, the quarry of the once-fertile plains and forests of imagination!!!!!!! will run dry. Your hunters will pick up on the fact that there are no more ideas to be hunted, only tedious fields that demand regular, monotonous maintenance, and they'll migrate elsewhere. This is all well and good, but every now and then wouldn't you like a steak to go with those potatoes?

But again, I get ahead of myself - let's take a step back to see what a dated educational game has to do with any of this.

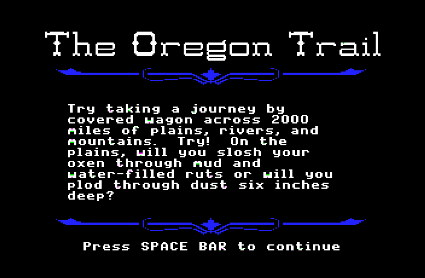

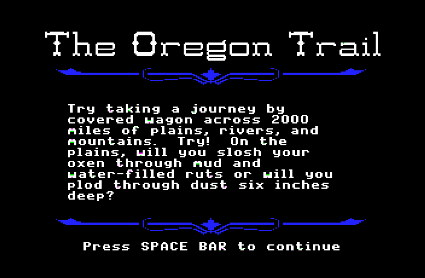

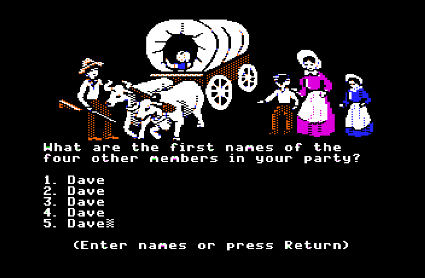

In The Oregon Trail, you're in charge of seeing a family[1] make the trip on the Oregon Trail from Missouri[2] to Oregon[3].

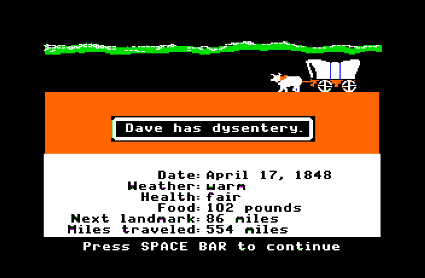

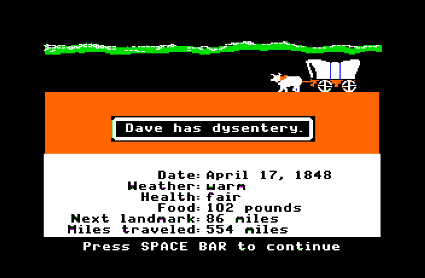

Notice how they emphasize "try"? It's because of a simple fact that you don't want to admit to yourself: no matter how simple it seems like it should be, that shit is fucking hard to accomplish[4]. To the outsider, it's as simple as getting from point A to point B. That's really all there is to it, right?

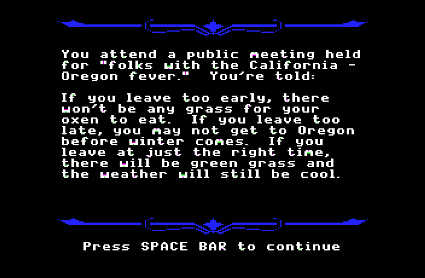

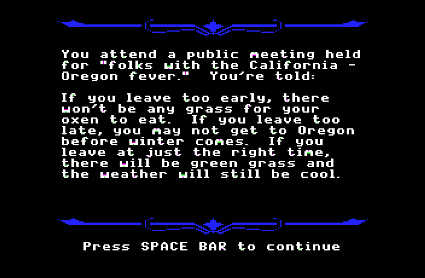

Go figure: there are nuances you hadn't even considered when you started out. Could this mean that you'll eventually discover nuances upon nuances? It turns something as seemingly simple as deciding when to leave into a dizzyingly difficult decision.

Leave early and you'll be freezing and making slow, painful progress[5]. Leave too late and you'll face the unenviable, super-difficult task of over-wintering[6]. Face it: you've tried to make a trip like this before and you've probably still got the bruises and scars from the last one, but you keep telling yourself that this time will be better.

You need supplies to make the trip: oxen to move your cart[7], clothing to keep you warm[8], food[9], replacement parts[10]... and bullets for hunting[11]. And naturally, you're constrained to a budget that's tighter than you'd like.

Making matters worse (you mean it gets worse?), you're probably making the trip with a bunch of egomaniacal (wo)man-children. I mean, we all strive to be egoless in our tasks, but we all take an undue amount of pride in an elegant solution and invariably take it the wrong way when someone points out the glaring flaws in our implementation. Or maybe I'm the only one, who knows?

But... about those supplies. Some of them are effectively interchangeable. If you've got a good hunter (you have a good hunter, right?), you can exchange bullets for food in the wild; that's the biggest bang (pun unintended) for your buck. Then you can trade food for just about anything else you need along the way[12]. You can run pretty lean-and-mean[13], but you need enough food to keep your bellies full.

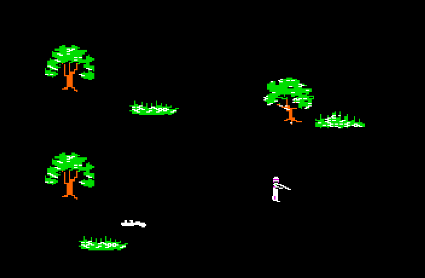

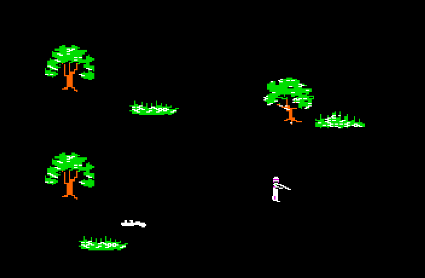

You're going to want to hunt (because it's fun!), but not every hunt can be a winner.

What can I tell you? Times is tough out there. Even with a gang of elite hunters firing on all cylinders and a pile of food (ideas!) as high as the eye can see, sometimes nature just doesn't smile on you. As in any pursuit, it's entirely possible to do everything right and still fail.

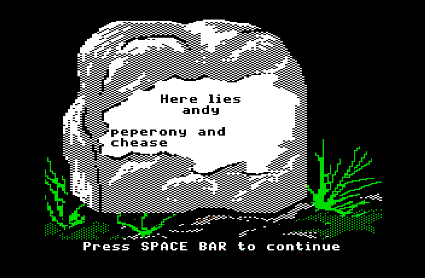

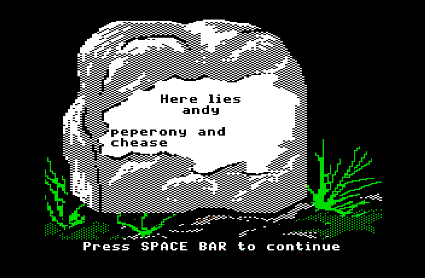

Not only are times tough as hell, but you're fairly constantly reminded of your own failures and the failures of others in a big, somber way.

Is it any wonder that Einstein up there didn't make it? He wasn't cut out for it. You can't teach people how to hunt: they're born with it or they'll never get it, no matter how blue in the face you get trying to explain it to them.

The trip itself? It's possible that your people are entirely happy in Missouri right now. You can crack that whip all you want, but if you don't instill in them a powerful longing to reach the promised land of Oregon, you'll never make it. In fact, you might find yourself unwillingly invited to a Donner Party. And not to ruin the surprise, but everyone at the party will be eating but you.

0. you know, your run-of-the-mill, whispered-about-in-legends superhacker

1. your development team

2. the start of the project

3. the project's end (you do have a concrete end-point in mind, right?)

4. #9 on Software's Classic Mistakes - Wishful Thinking

5. sometimes you have the toolchain you need to complete your project, other times you're kind of winging it as you go along - bootstrapping too much of your own technology stack only shrinks the chances that you'll ever make it

6. running out of funding

7. computers

8. technology stack - compiler, language, yadda yadda

9. ideas

10. source control and the other niceties of modern development (build server, unit tests)

11. ain't nothing more dire than running out of bullets - there's no metaphor here

12. dumbest way of describing the point of open source ever?

13. agile??!?
